It’s a reasonable enough assumption to make that if one new graphics card (GPU) can speed up your work by X amount, two will do the job twice as fast, right?

Well, it depends.

Adding in all the GPUs you can afford into one computer for ultimate power might sound like a great idea that should be easy to do, but like many things, there’s a little more nuance to it than just slapping together some GPUs you’ve found and calling it a day.

What is SLI and how does it work?

SLI, or “Scalable Link Interface”, is a technology that NVIDIA acquired and developed further. It allows you to link together multiple similar GPUs (up to four).

Image-Source: Teckknow

It allows you to theoretically use all of the GPUs together to complete certain computational tasks even faster without having to make a whole new computer for each GPU or wait for a new generational leap of GPU hardware.

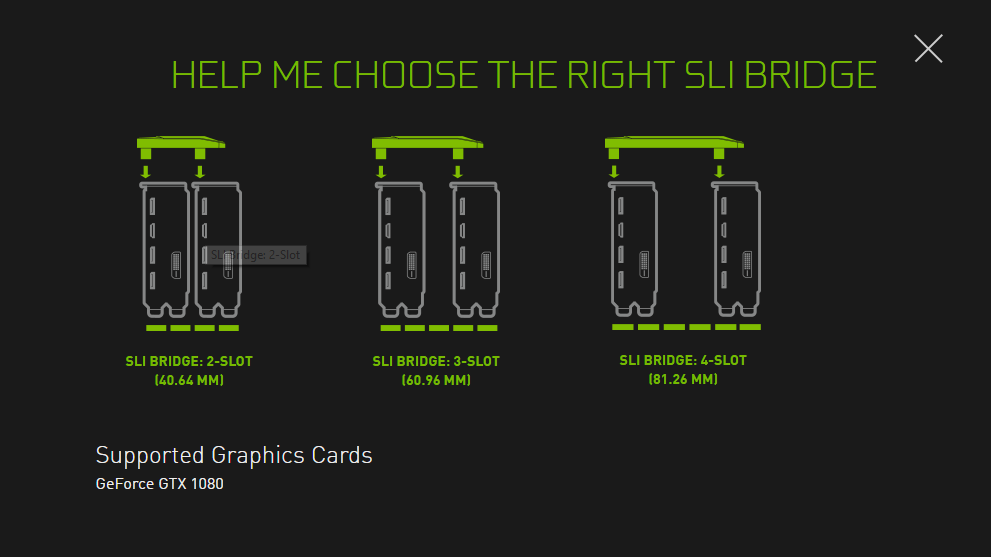

It works in a master-slave system wherein the “master” GPU (generally the topmost/first GPU in the computer) “controls” and directs the “slaves” (the other GPU or GPUs), which are connected to it using SLI bridges.

The “master” acts as a central hub to make sure that the “slaves” can communicate and accomplish whatever task is currently being performed in an efficient and stable manner.

Image-Source: Nvidia

The “master” then collects all of this scattered information and combines it into something that makes sense before sending it all to the monitor to be viewed—by you.

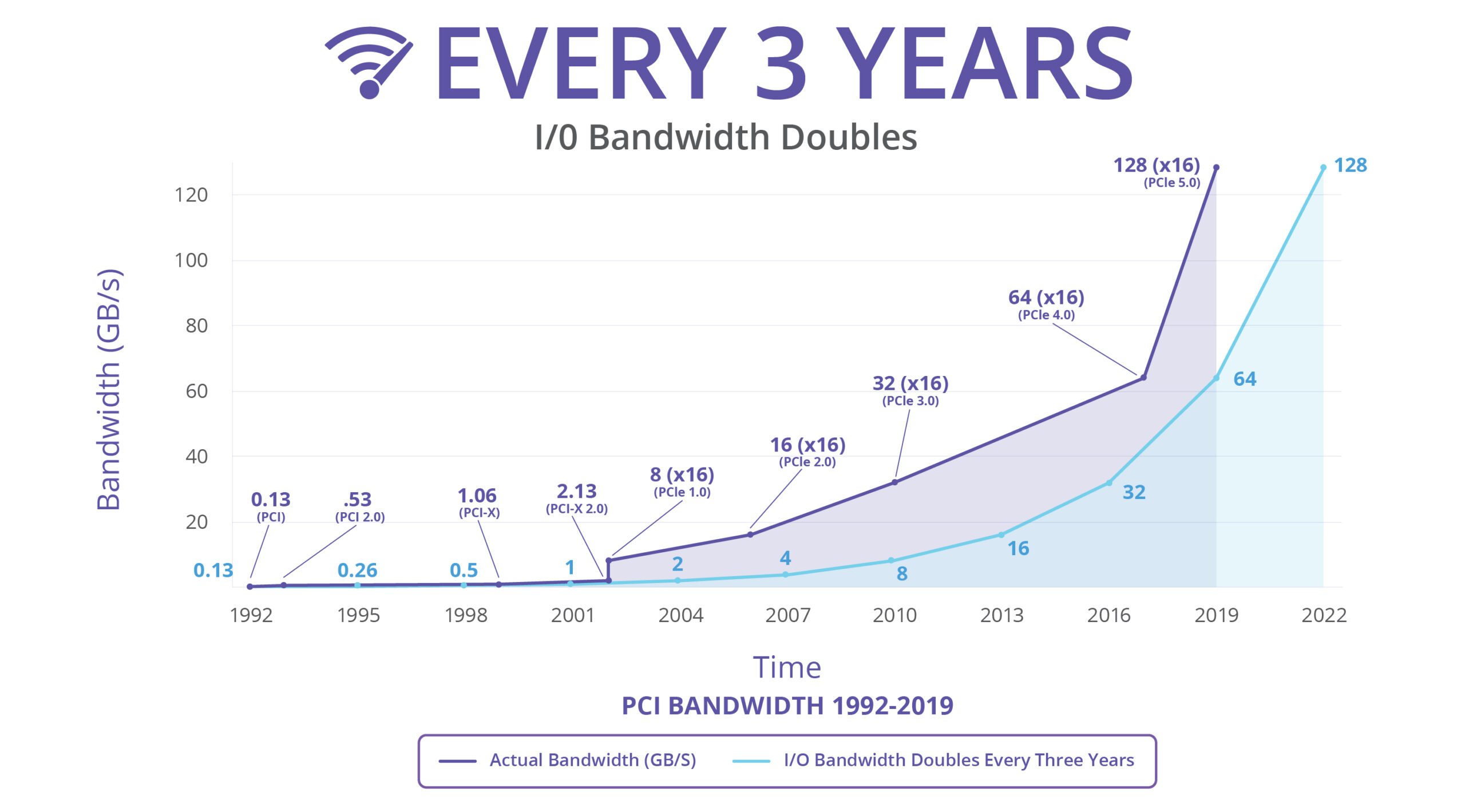

Now, you might be wondering why we even need an SLI bridge. Can’t we simply use the PCIe slot and PCIe lanes instead of having to fiddle about with these bridges?

Well, in theory, you can, but once you get to the higher/newer end of things, meaning anything made in the last 15-odd years, it isn’t enough.

A PCIe lane can only carry so much information. Until very recently, if you tried to combine GPUs only through PCIe lanes, you’d quickly run into massive bandwidth bottlenecks, to the point where it would’ve been easier and faster to just use a single GPU.

So, GPU manufacturers don’t allow this. Unless we’re talking about AMD, but that’s another matter entirely.

The point being, an SLI bridge tries to fix this. It’s a way of communicating between GPUs that circumvents the PCIe lanes and instead offloads that work directly onto the bridge.

Here’s an example:

When SLI was first released in ’04, the PCIe standard at the time (PCIe 1.0a) had a throughput of 250 MB/s (0.25 GB/s) per lane, whereas an SLI bridge had a throughput of around 1000 MB/s (1 GB/s).

The difference is apparent.

Image-Credit: PCI-SIG [PDF]

Is SLI worth it?

Is SLI dead? Back in the days when you needed more than one GPU to play the most demanding PC Games and PCIe bandwidth was insufficient, SLI made sense.

Nowadays, GPUs have become so powerful that it makes more sense to buy one powerful GPU than to deal with all of the issues SLI brings to the table: stuttering, bad Game support, extra heat, extra power consumption, more cables in the case, and worse value for the money. You don’t get 200% performance from two GPUs linked through SLI; it’s more like 150%.

In addition, because so few Gamers invest in an SLI setup, there is almost no incentive for Game Developers to support it.

So, yes, SLI is dead and is being phased out by Nvidia.
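To make the 150% figure mentioned above concrete, here is a small sketch of the arithmetic. The function names are made up for this example, the 1.5x speedup is the rough estimate from this article rather than a measurement, and the cost comparison assumes both GPUs cost the same.

```python
def scaling_efficiency(speedup: float, gpu_count: int) -> float:
    """Fraction of the ideal (linear) speedup that was actually achieved."""
    return speedup / gpu_count


def relative_cost_per_performance(speedup: float, gpu_count: int) -> float:
    """Money spent per unit of performance, relative to a single GPU.

    Assumes every GPU in the setup costs the same as the single GPU.
    """
    return gpu_count / speedup


# Two GPUs delivering roughly 150% of one GPU's performance.
print(scaling_efficiency(1.5, 2))                       # 0.75
print(round(relative_cost_per_performance(1.5, 2), 2))  # 1.33
```

In other words, under these assumptions each GPU only contributes about 75% of what it could on its own, and you pay about a third more per unit of performance.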

What is NVLink?

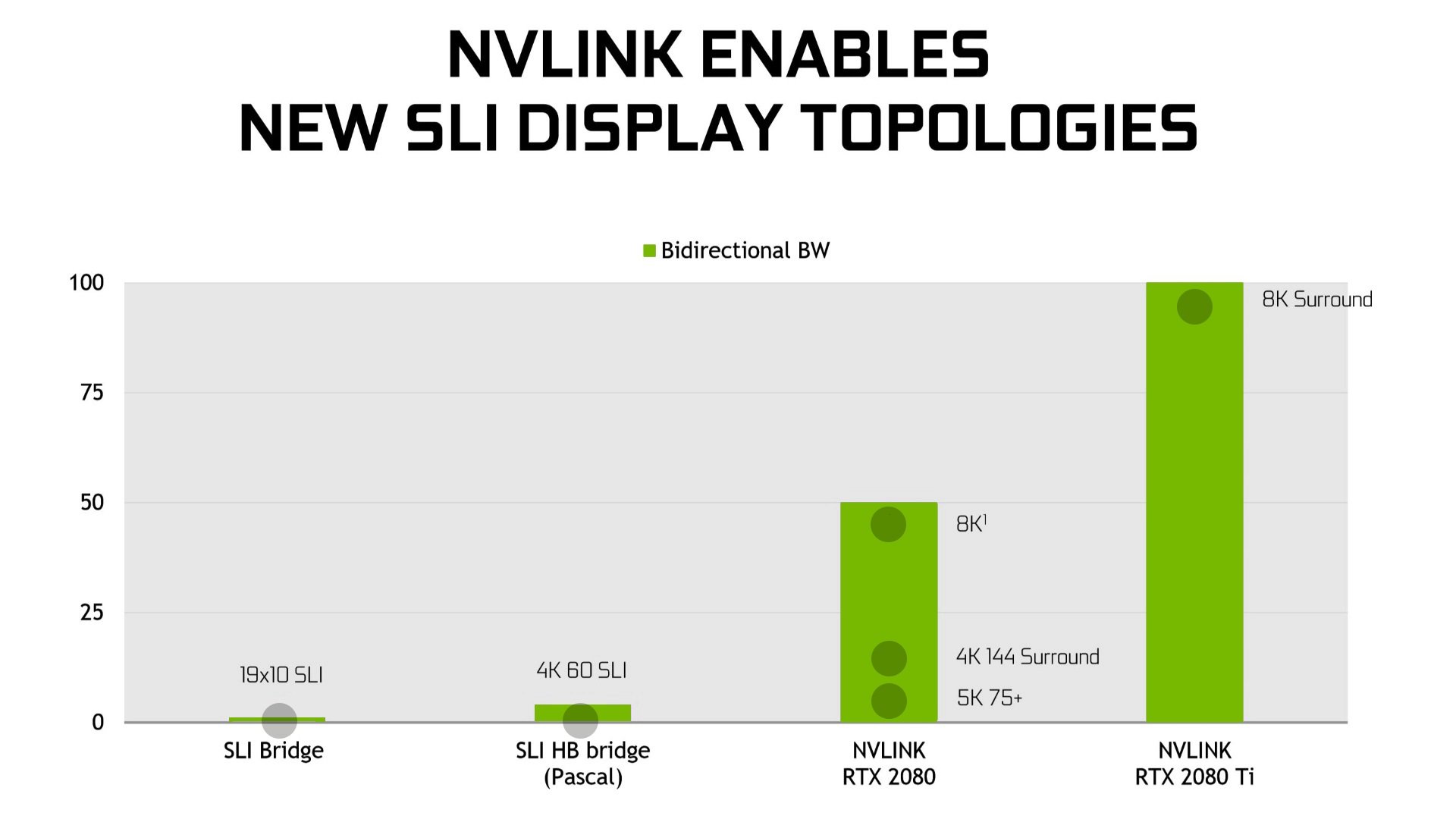

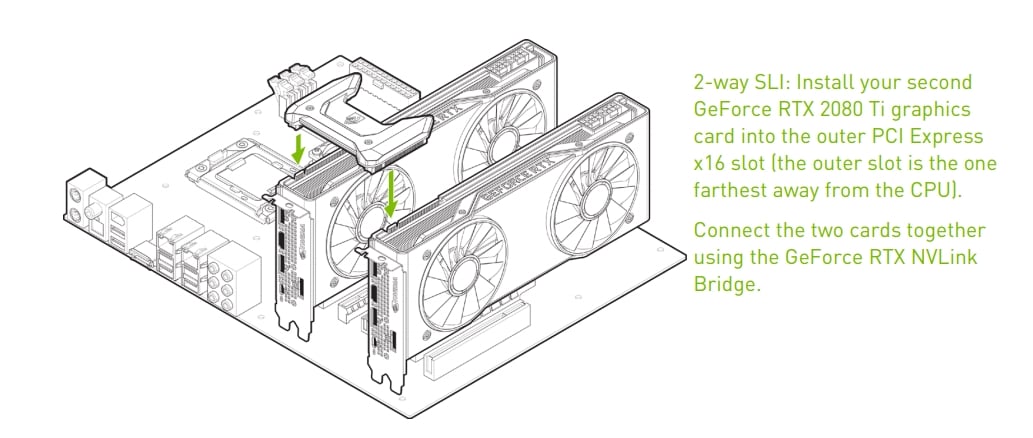

NVLink, in simple terms, is the bigger, badder brother of SLI. It was originally exclusive to enterprise-grade GPUs but came to the general consumer market with the release of the RTX 20 series of cards.

Image-Source: GPUMag

NVLink came about to fix a critical flaw of SLI: the bandwidth. SLI was fast, faster than PCIe at the time, but not fast enough, even with the high-bandwidth bridges that NVIDIA released later on. The GPUs could work together efficiently enough, yes, but that’s about all they could do with what SLI offered.

Here’s another example:

When NVLink was introduced, NVIDIA’s own high-bandwidth SLI bridge could handle up to 2000 MB/s (2 GB/s), and the PCIe standard at the time (PCIe 3.0) could handle 985 MB/s (0.985 GB/s) per lane.

NVLink, on the other hand? It could handle speeds of 300,000 MB/s (300 GB/s) or more in some enterprise configurations and around 100,000 MB/s (100 GB/s) in the more general consumer configurations.

Image-Source: Nvidia

The difference is even more apparent.
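To put the figures quoted so far side by side, here is a rough sketch that works out how long each link would need to move the same payload. The 1 GB payload is an arbitrary assumption for illustration, the PCIe entries are per-lane rates, and real transfers never quite reach these theoretical peaks.

```python
# Peak throughput figures as quoted in this article, in MB/s.
# The PCIe entries are per-lane rates; a physical slot combines several lanes.
LINKS_MB_PER_S = {
    "PCIe 1.0a (one lane)": 250,
    "SLI bridge (2004)": 1_000,
    "PCIe 3.0 (one lane)": 985,
    "High-bandwidth SLI bridge": 2_000,
    "NVLink (consumer)": 100_000,
    "NVLink (enterprise)": 300_000,
}

PAYLOAD_MB = 1_000  # hypothetical 1 GB payload, chosen only for illustration


def transfer_seconds(payload_mb: float, rate_mb_per_s: float) -> float:
    """Ideal transfer time, ignoring latency and protocol overhead."""
    return payload_mb / rate_mb_per_s


def speedup(fast_link: str, slow_link: str) -> float:
    """How many times more throughput fast_link has than slow_link."""
    return LINKS_MB_PER_S[fast_link] / LINKS_MB_PER_S[slow_link]


for name, rate in LINKS_MB_PER_S.items():
    millis = transfer_seconds(PAYLOAD_MB, rate) * 1000
    print(f"{name:<28} {millis:>8.1f} ms")

print(speedup("NVLink (consumer)", "High-bandwidth SLI bridge"))  # 50.0
```

Going by the quoted numbers alone, consumer NVLink has about 50 times the throughput of the high-bandwidth SLI bridge.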

So with SLI, it was less that you were using multiple GPUs as one, and more that you were using multiple GPUs together and calling it one.

What’s the difference?

Latency. Yes, you could combine GPUs with SLI, but it still took a not-insignificant amount of time for them to send information across, which isn’t ideal.

That’s where NVLink comes in to save the day.

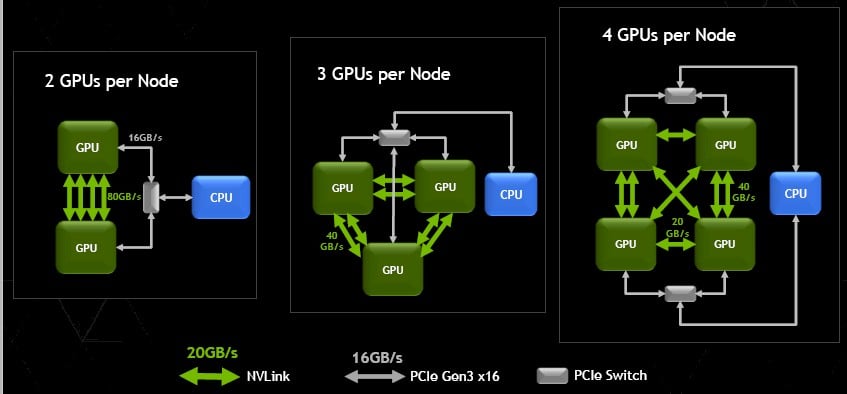

NVLink did a good ol’ Abe Lincoln and did away with that master-slave system we talked about in favor of something called mesh networking.

Image-Source: Nvidia

In Mesh Networking, every GPU is independent and can talk with the CPU and every other GPU directly.

This—along with the larger number of pins and newer signaling protocol—gives NVLink far lower latency and allows it to pool together resources like the VRAM in order to do far more complex calculations.

Image-Source: Nvidia

Something that SLI couldn’t do.
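The difference between the two layouts can be sketched with a toy graph model. This only models the layouts as described in this article (a hub-and-spoke setup versus every GPU linked to every other GPU); it is not a description of the actual hardware, and all function names are made up for the example.

```python
from collections import deque
from itertools import combinations


def star(gpu_count):
    """Master-slave layout: GPU 0 is the hub, every other GPU links only to it."""
    return {g: ({0} if g else set(range(1, gpu_count))) for g in range(gpu_count)}


def full_mesh(gpu_count):
    """Mesh layout: every GPU links directly to every other GPU."""
    return {g: set(range(gpu_count)) - {g} for g in range(gpu_count)}


def hops(graph, src, dst):
    """Breadth-first search: number of links crossed from src to dst."""
    seen, queue = {src}, deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in graph[node] - seen:
            seen.add(nxt)
            queue.append((nxt, dist + 1))
    raise ValueError("no path between the two GPUs")


def worst_case_hops(graph):
    """Largest hop count between any pair of GPUs."""
    return max(hops(graph, a, b) for a, b in combinations(graph, 2))


def link_count(graph):
    """Each link appears twice in the adjacency sets, once per endpoint."""
    return sum(len(peers) for peers in graph.values()) // 2


for name, graph in (("star", star(4)), ("mesh", full_mesh(4))):
    print(name, "links:", link_count(graph), "worst-case hops:", worst_case_hops(graph))
```

With four GPUs, the hub layout needs fewer links, but two non-hub GPUs always have to go through the hub (two hops). The mesh needs more links, but any pair is one hop apart.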

Drawbacks of NVLink

NVLink is still not a magical switch that you can turn on to gain more performance.

NVLink is great in some use cases, but not all.

Are you rendering complex scenes with millions of polygons? Or Editing videos and trying to scrub through RAW 12K footage? Simulating complex molecular structures?

These will all benefit greatly from 2 or more GPUs connected through NVLink (If the Software supports this feature!).

Image-Source: Nvidia

But trying to run Cyberpunk 2077 on Ultra at 8K? Sorry, but sadly that isn’t going to work. And if it does, it’ll work badly. At least with the current state of things.

NVLink just didn’t take off as most had hoped. Even nowadays, multi-GPU gaming setups are prone to a host of problems that make it hard to even get the games running, and if you do, you’ll most likely be plagued by micro stuttering.

Not to mention that developer support for multi-GPU setups ranges from “meh” to non-existent.

Is NVLink worth it?

Yes, but mostly for productivity and enterprise use cases.

You can still run some select games on multiple GPUs, but the experience is not optimal, to say the least. You might see higher peak frame rates, but the overall experience is worse than with a single, strong GPU.

Do you need SLI or NVLink for GPU Rendering?

We talked about productivity, and a large part of that is Rendering. Many 3D Render Engines such as Redshift, Octane, or Blender’s Cycles utilize your GPU(s) for this.

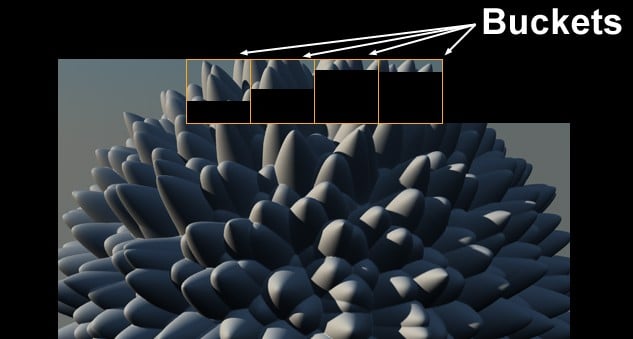

The great thing about GPU Rendering is, you won’t need SLI or NVLink to make use of multiple GPUs. If your GPUs are not linked in any way, the Render Engine will just upload your Scene Data to each of your GPUs and all of them will render a part of your image.

You’ll usually notice this when you count the number of Render Buckets, which usually matches the number of GPUs in your system.
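As a rough illustration of the idea, the sketch below cuts an image into buckets and hands them out to the GPUs in turn. It is a simplified assumption of how work could be split, not how any particular Render Engine schedules its buckets; real engines may hand out buckets dynamically as each GPU finishes. The function names and the 256-pixel bucket size are made up for the example.

```python
def make_buckets(width, height, size):
    """Cut the image into (x, y, w, h) tiles; edge tiles may be smaller."""
    return [
        (x, y, min(size, width - x), min(size, height - y))
        for y in range(0, height, size)
        for x in range(0, width, size)
    ]


def assign_round_robin(buckets, gpu_count):
    """Static assignment: bucket i goes to GPU i modulo gpu_count."""
    work = {gpu: [] for gpu in range(gpu_count)}
    for i, bucket in enumerate(buckets):
        work[i % gpu_count].append(bucket)
    return work


buckets = make_buckets(1920, 1080, 256)
work = assign_round_robin(buckets, gpu_count=2)
for gpu, assigned in work.items():
    print(f"GPU {gpu}: {len(assigned)} buckets")
```

Every GPU holds its own full copy of the Scene Data and only renders its share of the buckets, which is why no link between the cards is needed.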

Although none of the available GPU Render Engines require GPU Linking, some can make use of NVLink.

When you connect two GPUs through NVLink, their memory (VRAM) is shared and select Render Engines such as Redshift or Octane can make use of this feature.

In most scenarios, you will see no performance difference and your render times will stay the same. If you work on highly complex scenes, though, that require a higher amount of GPU Memory than your individual GPUs offer, you’ll likely see some speedups when using NVLink.

In my experience of working in the 3D-Industry for the past 15 years, though, in most cases, you will not need NVLink and it’s usually a hassle to set up.

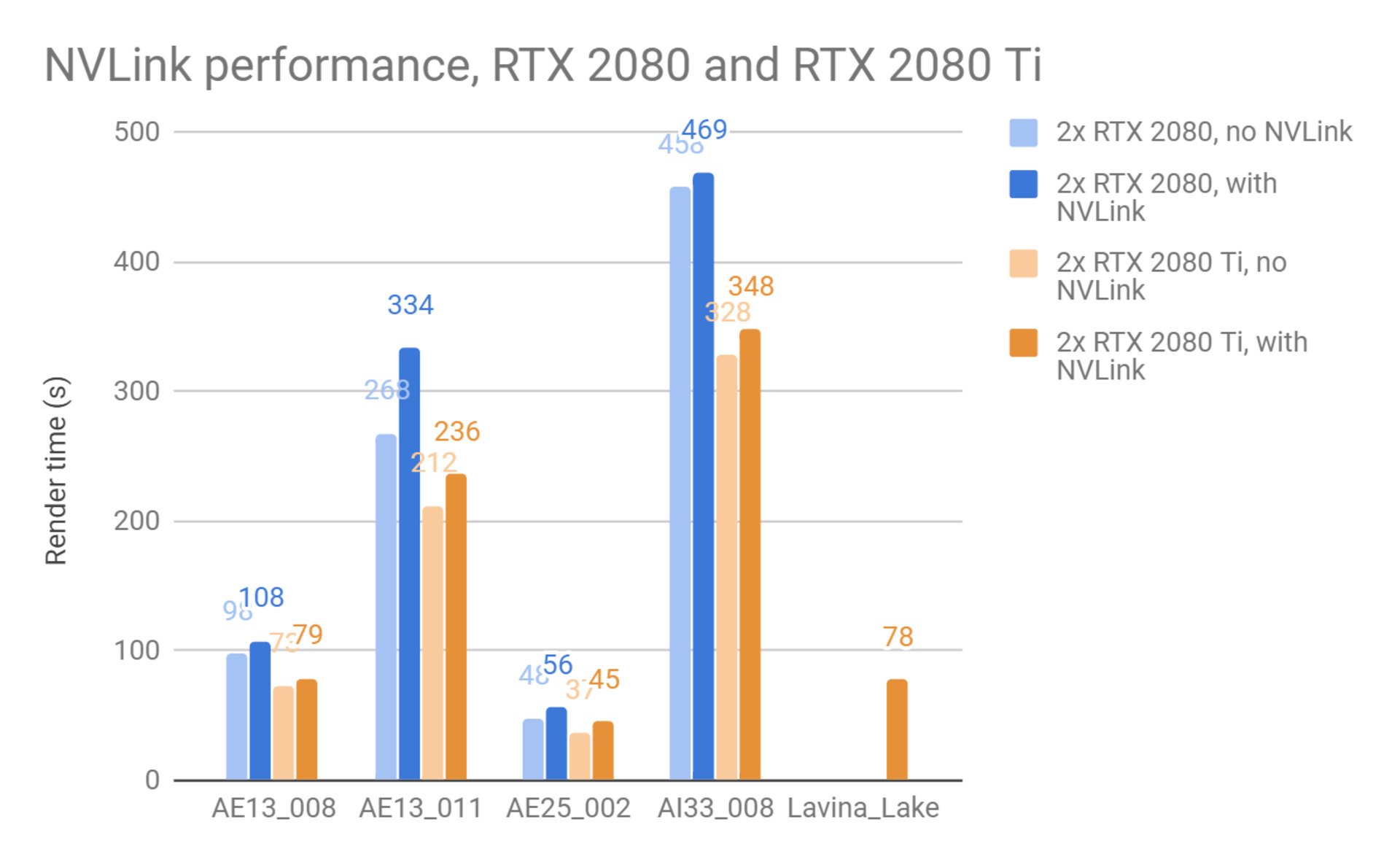

GPU Rendering NVLink Benchmark Comparison

Let’s take a look at some Benchmarks. Chaos Group, the company behind the popular Render Engine V-Ray, has done some extensive testing and comparisons of rendering with and without NVLink.

Image-Source: Chaosgroup

Two RTX 2080 Tis and two RTX 2080s were each tested by rendering five V-Ray Scenes of different complexity.

Four of the five Scenes see a decrease in render performance with NVLink enabled. The reason is simple: although the VRAM is pooled together, enabling NVLink requires additional resources to be managed, which comes at the expense of render performance.

The first four Scenes also don’t need more VRAM than any individual GPU has available.

The fifth Scene (Lavina_Lake), though, could only be rendered on the two 2080 Tis with NVLink enabled (combined VRAM capacity: 22 GB). The Scene was so complex that it needed more VRAM than a single GPU could offer, and more than the two 2080s connected through NVLink could offer (combined VRAM capacity: 16 GB).

As you can see, NVLink is only practical in rare cases when your projects are too complex to fit into the available VRAM of your individual GPUs.
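The decision boils down to a simple capacity check, sketched below. The per-card capacities (11 GB for the RTX 2080 Ti, 8 GB for the RTX 2080) match the combined figures above. The Scene sizes are hypothetical values picked for illustration, since the benchmark's actual memory requirements are not given here. Treating pooled VRAM as a plain sum is also an optimistic simplification.

```python
def render_plan(scene_gb: float, vram_per_gpu_gb: float, gpu_count: int = 2) -> str:
    """Classify a scene by where it fits, treating pooled VRAM as a plain sum."""
    if scene_gb <= vram_per_gpu_gb:
        return "fits on each GPU, NVLink not needed"
    if scene_gb <= vram_per_gpu_gb * gpu_count:
        return "needs pooled VRAM via NVLink"
    return "does not fit, even with NVLink"


SMALL_SCENE_GB = 6   # hypothetical scene that fits on a single card
LARGE_SCENE_GB = 18  # hypothetical scene, larger than 16 GB but under 22 GB

for card, vram in (("RTX 2080 Ti", 11), ("RTX 2080", 8)):
    print(f"2x {card}, small scene: {render_plan(SMALL_SCENE_GB, vram)}")
    print(f"2x {card}, large scene: {render_plan(LARGE_SCENE_GB, vram)}")
```

Only the middle case benefits from NVLink. In the first case it just adds overhead, and in the last case it cannot help.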

FAQ

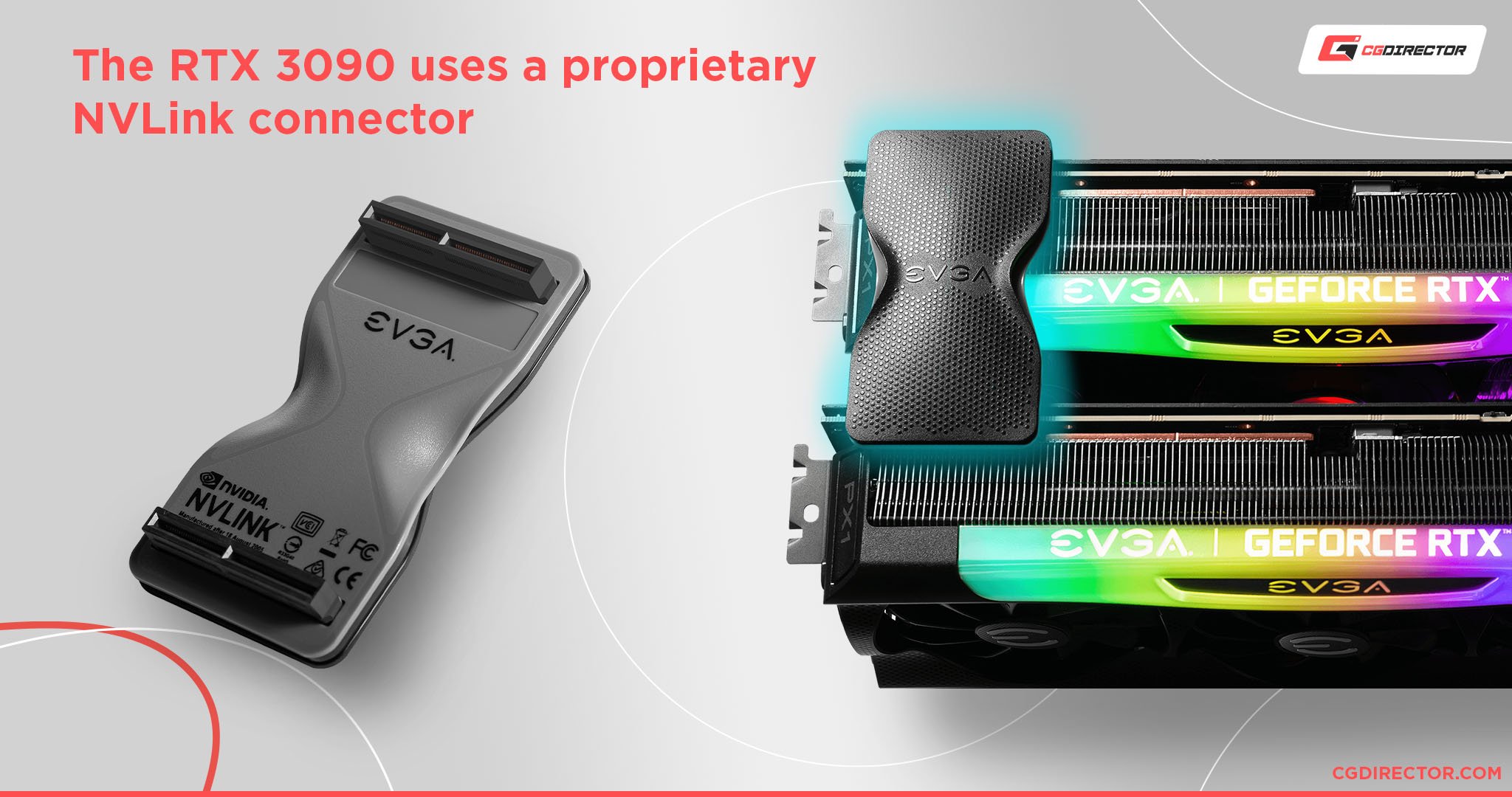

Can I use my existing SLI bridge for NVLink?

Nope. NVLink is a whole new standard with a whole new connector. Sadly, you’ll have to buy an NVLink bridge to use it.

How many cards can I link using SLI/NVLink?

- With SLI, it’s up to four cards.

- With NVLink, it’s two for consumers. In some enterprise setups, it’s up to 16 or even more.

Why is SLI/NVLink so bad in games?

- Mainly game implementation and micro stuttering. Both of those could potentially be fixed if given enough development time, but there’s just no great incentive to optimize something that only ~1% of people will use.

Can I connect together different types of cards with SLI/NVLink?

- When SLI was still supported, you couldn’t link together different cards. A GTX 1080, for example, had to go with another GTX 1080, although the manufacturer didn’t matter for the most part.

- With NVLink, it’s a bit more complicated. There are some consumer GPUs that support being linked with enterprise-grade GPUs, but the support is spotty at best.

- Basically, get two matching cards and you’ll be golden.

Is SLI dead?

As good as, yes.

Do you need a special motherboard for NVLink?

No. Just 2 or more GPUs that support NVLink and an NVLink bridge.

Can you link AMD and NVIDIA GPUs using NVLink?

No. But there have been attempts at using GPUs from both manufacturers together in the past.

Can the RTX 3060, 3070, 3080, 3090, etc. use NVLink?

Of the cards listed, only the RTX 3090 supports NVLink.

Do I have to use NVLink for multiple GPUs?

- Not really. For productivity purposes such as rendering, video editing, and other GPU-bound applications like that, you can use two GPUs independently without having them pool their resources and work together. (E.g. GPU rendering in Redshift or Octane works great without NVLink.)

- As a matter of fact, this type of setup is quite beneficial in some circumstances where, for example, you might want to set one GPU aside to render something while you use the other GPU for other work, instead of having to give away control of your PC to the rendering gods while it does its thing.
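One way to dedicate a GPU to a background job is the `CUDA_VISIBLE_DEVICES` environment variable, which CUDA-based programs generally honor. The sketch below is a minimal example under that assumption. The helper names and the render command are hypothetical, and your Render Engine may offer its own device selection setting that is more convenient.

```python
import os
import subprocess


def env_for_gpus(gpu_indices, base_env=None):
    """Return a copy of the environment that exposes only the given GPUs."""
    env = dict(os.environ if base_env is None else base_env)
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_indices)
    return env


def launch_on_gpus(command, gpu_indices):
    """Start command in the background, restricted to the given GPUs."""
    return subprocess.Popen(command, env=env_for_gpus(gpu_indices))


if __name__ == "__main__":
    # Hypothetical render command; replace it with your engine's real CLI.
    render_command = ["my-renderer", "--scene", "shot_010.scene"]
    visible = env_for_gpus([1])["CUDA_VISIBLE_DEVICES"]
    print("Would run", render_command, "with CUDA_VISIBLE_DEVICES =", visible)
```

Calling `launch_on_gpus(render_command, [1])` would then start the job on the second GPU only, leaving the first one free for your viewport and other work.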

What should I keep in mind if I decide to get another GPU?

- You should make sure that your motherboard has enough PCIe slots and that those slots have sufficient PCIe bandwidth to not bottleneck your additional GPU.

- If you have anything other than an ultra-cheap, ultra-small motherboard, you should be fine. Still, doesn’t hurt to check.

- You should make sure that you have a strong enough power supply that can actually support both GPUs.

- You should make sure that your case even has enough space for another GPU. They’re getting real big these days.

- And finally, if you plan to link the cards, you should make sure to buy an NVLink bridge along with your second GPU.
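Once the second card is installed, you can check what the driver sees with NVIDIA's `nvidia-smi` tool. The sketch below wraps two of its commands: a GPU query and `nvidia-smi nvlink --status`. The helper names are made up for this example. It assumes `nvidia-smi` is on your PATH and returns `None` otherwise.

```python
import shutil
import subprocess


def parse_gpu_csv(text):
    """Parse 'index, name, memory_mib' lines into a list of dicts."""
    gpus = []
    for line in text.strip().splitlines():
        parts = [field.strip() for field in line.split(",")]
        if len(parts) != 3:
            continue  # skip lines that do not match the expected layout
        index, name, memory_mib = parts
        gpus.append({"index": int(index), "name": name,
                     "memory_mib": int(memory_mib)})
    return gpus


def run_nvidia_smi(*args):
    """Run nvidia-smi with args; return stdout, or None if it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(["nvidia-smi", *args],
                            capture_output=True, text=True, check=False)
    return result.stdout if result.returncode == 0 else None


def list_gpus():
    """Return the installed NVIDIA GPUs with their total VRAM in MiB."""
    out = run_nvidia_smi("--query-gpu=index,name,memory.total",
                         "--format=csv,noheader,nounits")
    return None if out is None else parse_gpu_csv(out)


def nvlink_status():
    """Return the raw NVLink status report as text."""
    return run_nvidia_smi("nvlink", "--status")


if __name__ == "__main__":
    print(list_gpus())
    print(nvlink_status())
```

If both cards show up in the list, they are usable independently. Whether the bridge is active is something to read off the NVLink status report.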

That’s it from me. What are you using NVLink or SLI for? Let me know in the comments!

13 Comments

27 May, 2023

What about the new Intel GPUs in 2023, now with the drivers? Does Intel have a multi-GPU option? Even so, for the budget and specs, as opposed to gaming: what would, let’s say, two Arc A770s running side by side do? Is there an Intel solution for that, or are they just running by themselves alongside each other? Or what about, dare I say, AMD/Intel, or Intel/NVIDIA?

11 May, 2023

This was a helpful article. I recently got The Last of Us Part 1 for PC and my single ASUS ROG 2070 Super just isn’t cutting it. I can’t afford a 40 series right now, so I bought another used 2070 Super for dual-GPU for a slight performance boost while I save up to build a new rig. I can’t find my original GPU box so all I have is the SLI cable that came with my motherboard (ASUS ROG Strix Gaming Z390-E). I hope that’ll do for now, but I’ve read elsewhere certain NVLink bridges are made for specific GPUs, so is there a specific NVLink bridge I need to buy or will any do?